Who Leaked the Model? Tracking IP Infringers in Accountable Federated Learning

Abstract

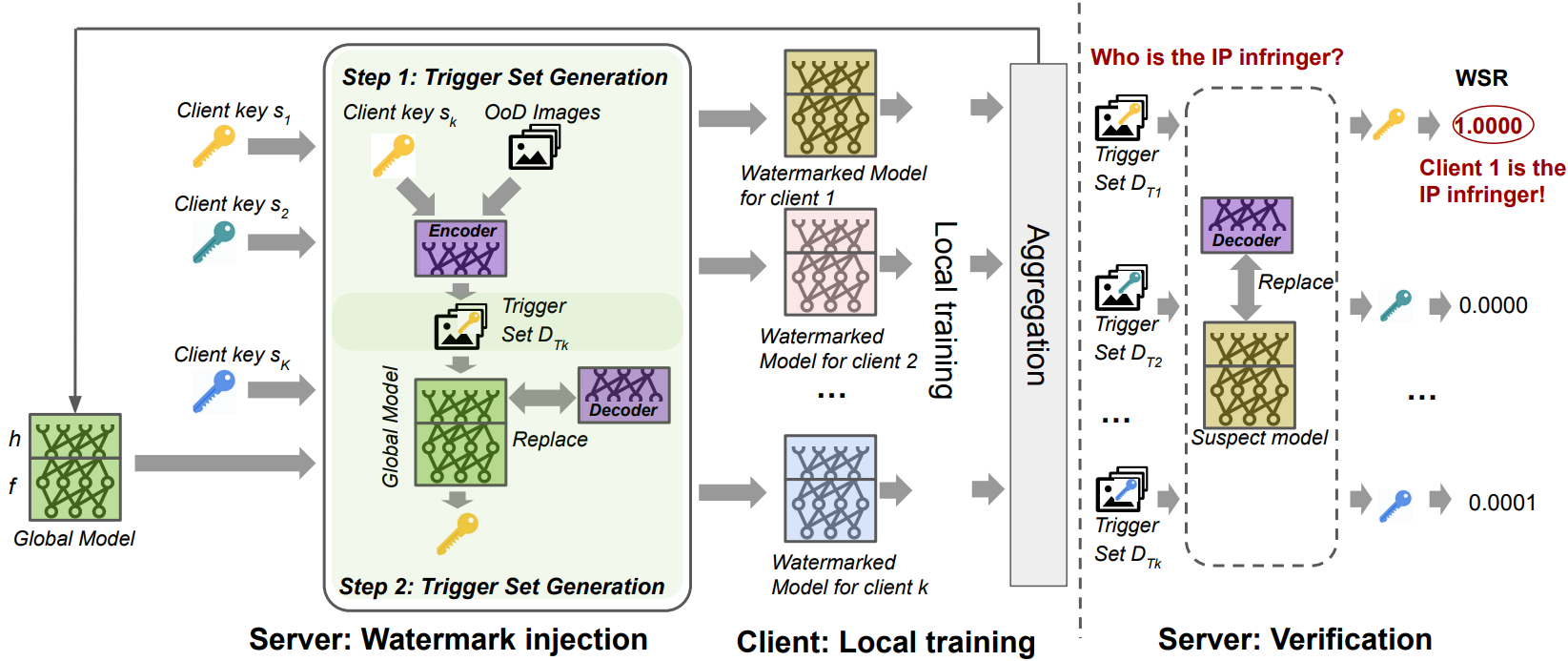

Federated learning (FL) emerges as an effective collaborative learning framework to coordinate data and computation resources from massive and distributed clients in training. Such collaboration results in non-trivial intellectual property (IP) represented by the model parameters that should be protected and shared by the whole party rather than an individual user. Meanwhile, the distributed nature of FL endorses a malicious client the convenience to compromise IP through illegal model leakage to unauthorized third parties. To block such IP leakage, it is essential to make the IP identifiable in the shared model and locate the anonymous infringer who first leaks it. The collective challenges call for accountable federated learning, which requires verifiable ownership of the model and is capable of revealing the infringer’s identity upon leakage. In this paper, we propose Decodable Unique Watermarking (DUW) for complying with the requirements of accountable FL. Specifically, before a global model is sent to a client in an FL round, DUW encodes a client-unique key into the model by leveraging a backdoor-based watermark injection. To identify the infringer of a leaked model, DUW examines the model and checks if the triggers can be decoded as the corresponding keys. Extensive empirical results show that DUW is highly effective and robust, achieving over 99% watermark success rate for Digits, CIFAR-10, and CIFAR-100 datasets under heterogeneous FL settings, and identifying the IP infringer with 100% accuracy even after common watermark removal attempts.